Microsoft provides many different services within the Azure cloud. One of them is Event Hubs: a highly scalable data streaming and event ingestion platform capable of receiving millions of events per second with low latency and high reliability. Azure Event Hubs is part of Microsoft’s Internet of Things services. When many people hear “IoT”, they think of fitness bands, Raspberry Pi, Arduino or smart homes, but it’s much more than this. Because Event Hubs supports the HTTPS and AMQP 1.0 (Advanced Message Queuing Protocol) protocols, it can be used by any device or application that has access to the internet. At first glance, this may not look very interesting, or may even seem intimidating because it is part of the world of Big Data, but after taking a closer look at a few possible applications you may change your mind.

WHEN TO CONSIDER EVENT HUBS?

Azure Event Hubs comes in handy for getting statistics and events from mobile devices.

When creating an application for tracking a fleet of cars, each vehicle needs to send its location every few seconds or minutes. If the app runs on an on-board computer with a GSM SIM card, it may be possible to collect additional data, such as mileage, fuel consumption or anything else that helps to check the health of the car. Additionally, the application can support user-triggered events or messages. At a frequency of one message every 10 seconds, a single car sends 8,640 messages a day, and that is GPS data only; a fleet of 100 cars therefore produces 864,000 messages, close to one million messages a day.
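The arithmetic above can be checked with a few lines of Python. This is a back-of-the-envelope sketch that assumes every car reports exactly once every 10 seconds around the clock and counts GPS messages only; `messages_per_day` is a hypothetical helper:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def messages_per_day(interval_seconds: int, vehicles: int = 1) -> int:
    """Messages per day when each vehicle sends one message every interval_seconds."""
    return (SECONDS_PER_DAY // interval_seconds) * vehicles


per_car = messages_per_day(10)               # 8,640 messages from one car
fleet = messages_per_day(10, vehicles=100)   # 864,000 messages from 100 cars
average_rate = fleet / SECONDS_PER_DAY       # average events per second for the whole fleet

print(f"{per_car:,} per car, {fleet:,} per fleet, {average_rate:.0f} events/s on average")
# 8,640 per car, 864,000 per fleet, 10 events/s on average
```

The average rate is a useful number to keep in mind when estimating how many throughput units a hub needs.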

Sports and medicine also have room for Event Hubs. Sports tracker applications can communicate with wristbands or smartwatches to get the heart rate and GPS location from the device. In medicine, many patients require constant monitoring due to diabetes or heart conditions. With a device that can send heart rate, blood sugar level or electrocardiogram data from a Holter monitor to a data center, it’s possible to analyze this data and notify the patient and medical personnel when something is wrong. I recommend watching Scott Hanselman’s demo for a practical example: https://www.youtube.com/watch?v=u5oTz1e5qqE.

Smart homes, industry and manufacturing can also benefit from Azure Event Hubs. A set of sensors that monitor energy consumption, temperature, RPM or resource levels provides all the information needed to monitor a facility. Combined with machine learning and analytics tools, this data makes it possible to optimize costs and production, predict failures before they happen, or send immediate notifications when something goes wrong.

Web, desktop and console applications, services and games can also generate a lot of events and one-way messages. Even if a single instance does not generate many events, thousands of instances most likely will.

In all of these cases, it’s possible to build your own solution for handling the messages or to use an existing message broker such as RabbitMQ, Apache Kafka or Redis. Both options involve additional hosting costs and the need for staff who monitor and manage the servers. Scaling such a solution may also be less cost-effective than Azure Event Hubs: it requires buying or renting additional servers, setting up the environments and load balancers, and scaling down unused machines. With Azure Event Hubs, throughput units can be added or removed with a few clicks in the Azure Portal and are available almost immediately.

OUR APPLICATION AND COMPARISON TO AMAZON SQS

One of our customers needed to upgrade their web analytics system to achieve better scalability and reliability and to optimize costs. One of the main requirements was to process events from websites in the same order in which they occurred. Additionally, they wanted to keep their current storage solution and avoid data replication between data centers.

As part of the preparations for the upgrade, I researched and compared two cloud services: Microsoft Azure Event Hubs and Amazon Simple Queue Service (SQS). Amazon’s Standard Queue was rejected because it does not guarantee that messages are delivered in the same order in which they were sent. To get FIFO ordering in Event Hubs, events have to be sent with the same partition key.

An SQS FIFO queue has a maximum throughput of 300 transactions (API calls) per second, and scaling the solution requires creating new queues. Event Hubs uses throughput units (TPUs), each providing ingress of 1,000 messages per second or 1 MB/s and egress of 2 MB/s. Throughput units can be added to or removed from an existing hub, up to a maximum of 20 TPUs for the Basic and Standard plans.

Both services support the same maximum message size of 256 kB (up to 1 MB with Microsoft’s Dedicated offering) and use a billable unit of 64 kB of data. This means that a 12 kB message is billed as one 64 kB unit and a 70 kB message is billed as two units.
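The rounding rule is easy to express in code. The helper below only illustrates the 64 kB rounding described above; the function name is hypothetical, and it ignores all other pricing details of either service:

```python
import math

BILLING_UNIT_KB = 64  # billable unit quoted above for both services


def billable_units(message_size_kb: float) -> int:
    """Number of 64 kB billing units consumed by one message (rounded up)."""
    if message_size_kb <= 0:
        raise ValueError("message size must be positive")
    return math.ceil(message_size_kb / BILLING_UNIT_KB)


for size_kb in (12, 64, 70, 256):
    print(f"{size_kb} kB -> {billable_units(size_kb)} unit(s)")
# 12 kB -> 1 unit(s)
# 64 kB -> 1 unit(s)
# 70 kB -> 2 unit(s)
# 256 kB -> 4 unit(s)
```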

Amazon’s service requires consumers to delete processed messages from the queue, so when multiple applications consume the same event stream, an additional synchronization mechanism has to be built. The Standard plan of Event Hubs, on the other hand, allows up to 20 consumer groups, which let applications read the stream independently and keep their own checkpoints, used to resume reading from the last saved offset after a failure.

When publishing a large number of messages, it’s highly recommended to implement a dead-letter queue that stores messages which were not processed successfully so that they can be retried. Amazon SQS provides this out of the box; Microsoft Event Hubs does not.
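Since Event Hubs has no built-in dead-letter queue, the publisher has to handle failed sends itself. Below is a minimal sketch of the idea in Python. `send` stands for whatever function actually publishes an event (for example, a call into an Event Hubs client), and both `publish_with_dead_letter` and `flaky_send` are hypothetical names used only for illustration. A production version would also wait between retries and persist dead-lettered events (e.g. in storage) instead of keeping them in memory:

```python
from typing import Callable, List, Tuple


def publish_with_dead_letter(
    events: List[str],
    send: Callable[[str], None],
    max_attempts: int = 3,
) -> Tuple[List[str], List[str]]:
    """Try to send each event up to max_attempts times.

    Returns (sent, dead_lettered): events whose every attempt raised an
    exception end up in the dead-letter list for later inspection or replay.
    """
    sent: List[str] = []
    dead_letter: List[str] = []
    for event in events:
        for attempt in range(1, max_attempts + 1):
            try:
                send(event)
            except Exception:
                if attempt == max_attempts:
                    dead_letter.append(event)  # give up: park the event
                continue  # retry (a real publisher would back off here)
            sent.append(event)
            break
    return sent, dead_letter


def flaky_send(event: str) -> None:
    """Hypothetical sender that always fails for events marked as bad."""
    if event.startswith("bad"):
        raise ConnectionError(f"could not send {event}")


sent, dlq = publish_with_dead_letter(["a", "bad-1", "b"], flaky_send)
print(sent, dlq)  # ['a', 'b'] ['bad-1']
```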

Billing methods differ as well. Both services bill per million requests. Microsoft charges for ingress events and for throughput units per hour. Amazon offers a free tier covering the first 1 million requests and 1 GB of egress transfer, after which customers are billed for requests and egress transfer.

Because of the easier scalability through changing the number of TPUs, the support for multiple consumer groups and billing based on ingress events (quite important given the requirement of having multiple event consumers), we decided to use Microsoft Azure Event Hubs.

THE STRUCTURE OF AZURE EVENT HUBS

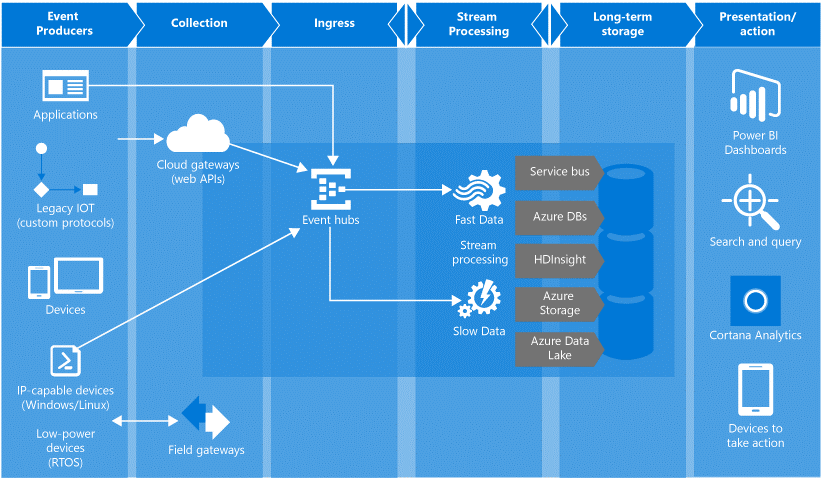

Usually Event Hubs plays a role of “front door” for an event pipeline which allows decoupling event production from the consumption. Since Event Hubs are created for high throughput and event processing scenarios they do not implement some capabilities that are available in Service Bus messaging entities, such as topics.

1. Common architecture utilizing Event Hubs and other Azure services

Events can be published to Event Hubs individually or in batches. In both cases, the maximum size of one operation is 256 kB; with a Dedicated solution, the limit is raised to 1 MB. Event publishers should not need to be aware of the partitions within the hub, so when events must be processed in a particular order, the publisher should specify a partition key for them.
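To stay within the per-operation limit, a publisher has to group events into batches whose total size does not exceed 256 kB. The sketch below shows a greedy approach in plain Python. It is a simplification: it counts only payload bytes, while a real batch also carries per-event metadata, which is why the official client libraries provide a batch type (for example, `create_batch()` on the Python SDK’s producer client) that tracks the encoded size for you. `split_into_batches` is a hypothetical helper:

```python
from typing import List

MAX_BATCH_BYTES = 256 * 1024  # per-operation limit quoted above (256 kB)


def split_into_batches(events: List[bytes], limit: int = MAX_BATCH_BYTES) -> List[List[bytes]]:
    """Greedily group events so each batch's total payload size stays within limit."""
    batches: List[List[bytes]] = []
    current: List[bytes] = []
    current_size = 0
    for event in events:
        size = len(event)
        if size > limit:
            raise ValueError(f"a single {size}-byte event exceeds the {limit}-byte limit")
        if current and current_size + size > limit:
            batches.append(current)  # current batch is full: close it
            current, current_size = [], 0
        current.append(event)
        current_size += size
    if current:
        batches.append(current)
    return batches


events = [b"x" * 100 * 1024 for _ in range(5)]  # five 100 kB payloads
print([len(batch) for batch in split_into_batches(events)])  # [2, 2, 1]
```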

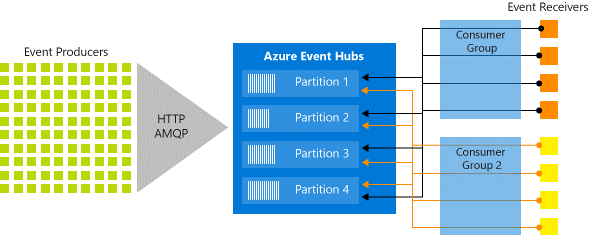

Azure Event Hubs contains a number of partitions. They are used to retain events for specified amount of time. Each partition is an ordered stream where new events are added to its end. Each hub has between 2 and 32 partitions. Their number is defined during creation and cannot be changed, so it’s important to consider long-term scale before creating new Event Hub. Partitions organize downstream parallelism required in consuming applications and their number is related with the possible amount of concurrent readers using the same hub.

2. Event Hubs stream processing

Events can be sent to a specific partition using its ID or routed using a partition key. While the number of partition IDs is limited, there can be many more partition keys than partitions, because each key is processed by a hashing function that assigns it to a partition. Sending events with the same partition key ensures that they land in the same partition and are therefore read in the same order in which they were sent. Events sent without a partition key or partition ID are distributed across partitions using a round-robin algorithm.
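The two routing rules can be illustrated with a toy model. The hash below (SHA-256 modulo the partition count) is an assumption chosen for the example and is not the hash Event Hubs actually uses; the point is only that the same key always lands in the same partition, many keys share a partition, and events without a key are spread round-robin. `PartitionRouter` is a hypothetical class:

```python
import hashlib
import itertools
from typing import Optional


class PartitionRouter:
    """Toy model of partition assignment (NOT the real Event Hubs hash)."""

    def __init__(self, partition_count: int) -> None:
        if not 2 <= partition_count <= 32:
            raise ValueError("a hub has between 2 and 32 partitions")
        self.partition_count = partition_count
        self._next_partition = itertools.cycle(range(partition_count))

    def route(self, partition_key: Optional[str] = None) -> int:
        """Return the partition index for an event with an optional partition key."""
        if partition_key is None:
            # No key: spread events evenly, round-robin.
            return next(self._next_partition)
        # Key present: deterministic hash, so the same key always maps to the same partition.
        digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % self.partition_count


router = PartitionRouter(partition_count=4)
print(router.route("car-42") == router.route("car-42"))  # True
print([router.route() for _ in range(5)])                # [0, 1, 2, 3, 0]
```

Because keys are hashed, hundreds of car IDs still map onto only four partitions; ordering is preserved for each key, since all of its events share one partition.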

Partitions are accessible only through consumer groups. Each group can have up to 5 concurrent readers on a partition, but it’s highly recommended to have only one active reader per partition per group. A consumer group is a view of the entire event hub with its own state: position or offset. Each event read from a partition carries an offset that represents its position in that partition. A reader application can specify the position from which it starts reading the event stream, either as a timestamp or as an offset value. Alternatively, checkpoints can be created for a consumer group, which allow readers to resume reading after reconnecting. Both offsets and checkpoints are tracked per partition. The main difference is that when working with raw offsets, the consumer application must store and supply the offset itself, while checkpoints are typically managed by the event processor in the Event Hubs client libraries, which saves them to a checkpoint store (for example, Azure Blob Storage) and resumes from them automatically.
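The relationship between a partition, consumer groups and checkpoints can be modeled in a few lines. This is an in-memory sketch, not the SDK; `Partition`, `CheckpointStore` and `consume` are hypothetical names. Note that reading does not remove events (unlike deleting messages from an SQS queue), so two groups can read the same stream independently:

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Partition:
    """Append-only ordered stream; an event's offset is its index in the list."""
    events: List[str] = field(default_factory=list)

    def append(self, event: str) -> int:
        self.events.append(event)
        return len(self.events) - 1  # offset of the new event

    def read_from(self, offset: int, max_events: int) -> List[Tuple[int, str]]:
        end = min(offset + max_events, len(self.events))
        return [(i, self.events[i]) for i in range(offset, end)]


@dataclass
class CheckpointStore:
    """Next offset to read, kept per (consumer group, partition id)."""
    positions: Dict[Tuple[str, int], int] = field(default_factory=dict)

    def load(self, group: str, partition_id: int) -> int:
        return self.positions.get((group, partition_id), 0)  # no checkpoint: start of stream

    def save(self, group: str, partition_id: int, next_offset: int) -> None:
        self.positions[(group, partition_id)] = next_offset


def consume(group: str, partition_id: int, partition: Partition,
            store: CheckpointStore, max_events: int) -> List[str]:
    """Read up to max_events from the group's checkpoint, then checkpoint past them."""
    start = store.load(group, partition_id)
    batch = partition.read_from(start, max_events)
    if batch:
        last_offset = batch[-1][0]
        store.save(group, partition_id, last_offset + 1)
    return [event for _, event in batch]


partition = Partition()
for i in range(5):
    partition.append(f"e{i}")

store = CheckpointStore()
print(consume("analytics", 0, partition, store, max_events=3))  # ['e0', 'e1', 'e2']
print(consume("archive", 0, partition, store, max_events=5))    # ['e0', 'e1', 'e2', 'e3', 'e4']
print(consume("analytics", 0, partition, store, max_events=3))  # ['e3', 'e4']
```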

The scale of Event Hubs is controlled by throughput units (TPUs), which are its units of capacity. Each TPU provides:

- Ingress: 1 MBps or 1000 events per second (whichever comes first)

- Egress: 2 MBps

Each hub can have up to 20 TPUs; if more are needed, you have to contact Azure Support. Each throughput unit is billed for a minimum of one hour. Since a single partition can scale to at most one TPU, it is recommended not to have more TPUs than partitions.
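The limits above can be turned into a rough sizing helper. It assumes the per-TPU figures quoted in this section, takes the largest of the three requirements (event rate, ingress bytes, egress bytes), and flags the cases where the partition count or the 20-TPU limit would be exceeded. `required_tpus` is a hypothetical helper, not part of any Azure API:

```python
import math

INGRESS_EVENTS_PER_TPU = 1000  # events per second
INGRESS_MB_PER_TPU = 1.0       # MB per second
EGRESS_MB_PER_TPU = 2.0        # MB per second
MAX_TPUS_SELF_SERVICE = 20     # above this, contact Azure Support


def required_tpus(events_per_sec: float, ingress_mb_per_sec: float,
                  egress_mb_per_sec: float, partitions: int) -> int:
    """Smallest number of TPUs (at least one) covering ingress and egress."""
    needed = max(
        1,
        math.ceil(events_per_sec / INGRESS_EVENTS_PER_TPU),
        math.ceil(ingress_mb_per_sec / INGRESS_MB_PER_TPU),
        math.ceil(egress_mb_per_sec / EGRESS_MB_PER_TPU),
    )
    if needed > partitions:
        raise ValueError(f"{needed} TPUs exceed {partitions} partitions; "
                         "a partition cannot use more than one TPU")
    if needed > MAX_TPUS_SELF_SERVICE:
        raise ValueError(f"{needed} TPUs exceed {MAX_TPUS_SELF_SERVICE}; contact Azure Support")
    return needed


print(required_tpus(2500, 1.5, 4.0, partitions=8))  # 3: the event rate is the bottleneck
print(required_tpus(10, 0.01, 0.02, partitions=4))  # 1
```

The second call corresponds to the 100-car fleet from the beginning of the article (about 10 events per second), assuming GPS messages of roughly 1 kB each: a single TPU is enough.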

SUMMARY

Microsoft Azure Event Hubs is a high-throughput, scalable service with low latency and high reliability. Thanks to the AMQP 1.0 and HTTPS protocols, Event Hubs can be used on a variety of devices and from many programming languages, which opens up a vast range of possible applications at relatively low cost. Because the number of throughput units can be managed dynamically, the capacity of Event Hubs can be scaled with a few clicks in the Azure Portal, without the need to manage physical infrastructure.

USEFUL LINKS

- https://azure.microsoft.com/en-us/services/event-hubs/

- https://docs.microsoft.com/en-us/azure/

- https://azure.microsoft.com/en-us/pricing/details/event-hubs/